A Mechanism For Simd Execution Of Spmd Programs

Introduction The open source based (commonly referred to in previous documents as ISPC) is not a replacement for the Gnu. Compiler Collection (GCC) or the Microsoft. C compiler; instead it should be considered more akin to a shader compiler for the CPU that can generate vector instructions for a variety of instruction sets such as Intel® Streaming SIMD Extensions 2 (Intel® SSE2), Intel® Streaming SIMD Extensions 4 (Intel® SSE4), Intel® Advanced Vector Extensions (Intel® AVX), Intel® AVX2, and so on.

The input shaders or kernels are C-based and the output is a precompiled object file with an accompanying header file to be included in your application. Through the use of a small number of keywords, the compiler can be explicitly directed on how the work should be split across the CPU vector units. The extra performance from explicit vectorization is available if a developer chooses to write intrinsics directly into their codebase, however, this has a high complexity and high maintenance cost. Intel SPMD Program Compiler kernels are written using a high-level language so the development cost is low.

A SPMD Compiler for High-Performance CPU Programming Matt Pharr. SPMD on SIMD Execution. SPMD program instances. SPMD vs SIMD In SPMD, multiple. Presents the programmer with a common memory space and the possibility to parallelize execution by having the program take.

It also becomes trivial to support multiple instruction sets to provide the best performance for the CPU that the code is running on, rather than the lowest common denominator, such as Intel SSE4. This article does not aim to teach the reader how to write Intel SPMD Program Compiler kernels; it simply demonstrates how to plug Intel SPMD Program Compiler into a Microsoft Visual Studio.

solution and provides guidance on how to port simple High-Level Shading Language. (HLSL.) compute shaders to Intel SPMD Program Compiler kernels.

For a more detailed overview of Intel SPMD Program Compiler, please refer to the online. The example code provided with this article is based on a modified version of the that has been ported to support Intel SPMD Program Compiler vectorized compute kernels. It is not intended to show performance deltas against the GPU, but to show the large performance gains that can be achieved when moving from standard scalar CPU code to vectorized CPU code. While this application clearly does not represent a game due to the very light CPU load in the original sample, it does show the kind of performance scaling possible by using the vector units on multiple CPU cores. Screenshot from the modified n-Body Gravity sample The Original DirectX.

12 n-Body Gravity Sample Before starting the port to Intel SPMD Program Compiler, it would be useful to understand the original sample and its intent. The DirectX 12 n-Body Gravity sample was written to highlight how to use the separate compute engine in DirectX 12 to perform asynchronous compute; that is, the particle render is done in parallel to the particle update, all on the GPU. The sample generates 10,000 particles and updates and renders them each frame. The update involves every particle interacting with every other particle, to generate 100,000,000 interactions per simulation tick.

The HLSL compute shader maps a compute thread to each particle to perform the update. The particle data is double-buffered so that for each frame, the GPU renders from buffer 1 and asynchronously updates buffer 2, before flipping the buffers in preparation for the next frame. Pretty simple, and a good candidate for an Intel SPMD Program Compiler port because an asynchronous compute task lends itself perfectly to being run on the CPU; the code and engine have already been designed to perform the compute in a concurrent execution path, so by transferring some of this load onto the often underutilized CPU, the GPU can either finish its frame quicker, or can be given more work, while making full use of the CPU. Porting to Intel® SPMD Program Compiler The recommended approach would be to port from HLSL to scalar C/C first. This ensures that the algorithm is correct and produces the correct results, interacts with the rest of the application correctly and, if applicable, handles multiple threads properly. As trivial as this sounds, there are a few things to consider:.

How to share memory between the GPU and CPU. How to synchronize between the GPU and CPU. How to partition the work for single instruction/multiple data (SIMD) and multithreading. Porting the HLSL code to scalar C. Porting scalar C to an Intel SPMD Program Compiler kernel. Some of these are easier than others. Sharing Memory We know we need to share the memory between the CPU and the GPU, but how?

Fortunately, DirectX 12 provides a few options, such as mapping GPU buffers into CPU memory, and so on. To keep this example simple and to minimize code changes, we just re-use the particle upload staging buffers that were used for the initialization of the GPU particle buffers, and we create a double-buffered CPU copy for CPU access. The usage model becomes:.

Update CPU-accessible particle buffer from the CPU. Call the DirectX 12 helper UpdateSubresources using the original upload staging buffer with the GPU particle buffer as the destination. Bind the GPU particle buffer and render. Synchronization The synchronization falls out naturally as the original async compute code already has a DirectX 12 Fence object for marshalling the interaction between the compute and render, and this is simply reused to signal to the render engine that the copy has finished. Partitioning the Work To partition the work, we should first consider how the GPU partitions the work, as this may be a natural fit for the CPU. Compute shaders have two ways to control their partitioning.

First is the dispatch size, which is the size passed to the API call when recording the command stream. This describes the number of and the dimensionality of the work groups to be run. Second is the size and dimensionality of the local work group, which is hard coded into the shader itself. Each item in the local work group can be considered a work thread and each thread can share information with other threads in the work group if shared memory is used. Looking at the nBodyGravityCS. Hlsl compute shader, we can see that the local work group size is 128 x 1 x 1 and it uses some shared memory to optimize some of the particle loads, but this may not be necessary on the CPU. Other than this, there is no interaction between the threads and each thread works on a different particle from the outer loop while interacting with all other particles in the inner loop.

This seems a natural fit to the CPU vector width, so we could swap the 128 x 1 x 1 with 8 x 1 x 1 for Intel AVX2 or 4 x 1 x 1 for Intel SSE4. We can also use the dispatch size as a hint for how to multithread the code, so we could divide the 10,000 particles by 8 or 4, depending on the SIMD width. But, because we have discovered that there is no dependency between each thread, we could simplify it and just divide the number of particles by the available number of threads in the thread pool, or available logical cores on the device, which would be 8 on a typical quad core CPU with Intel® Hyper-Threading Technology enabled. When porting other compute shaders, this may require more thought. This gives us the following pseudocode: For each thread Process N particles where N is 10000/threadCount For each M particles from N, where M is the SIMD width Test interaction with all 10000 particles Porting HLSL. to Scalar C When writing Intel SPMD Program Compiler kernels, unless you are experienced, it is recommended that you have a scalar C version written first. This will ensure that all of the application glue, multithreading, and memory operations are working before you start vectorizing.

To that end, most of the HLSL code from nBodyGravityCS.hlsl will work in C with minimal modifications other than adding the outer loop for the particles and changing the shader math vector types to using a C-based equivalent. In this example, float4/float3 types were exchanged for the DirectX XMFLOAT4/XMFLOAT3 types, and some vector math operations were split out into their scalar equivalents. The CPU particle buffers are used for reading and writing, and then the write buffer is uploaded to the GPU as described above, using the original fences for the synchronization. To provide the threading the sample uses Microsoft’s concurrency::parallelfor construct from their. The code can be seen in D3D12nBodyGravity::SimulateCPU and D3D12nBodyGravity::ProcessParticles. Once the scalar code is working, it is worth doing a quick performance check to ensure there are no algorithmic hot spots that should be fixed before moving to Intel SPMD Program Compiler.

In this sample, some basic hot spot analysis with tools highlighted that a reciprocal square root (sqrt) was on a hot path, so this was replaced with the infamous approximation from Quake. that provided a small performance improvement with no perceivable impact due to the loss of precision. Porting Scalar C to Scalar Intel® SPMD Program Compiler Once your build system has been modified to build Intel SPMD Program Compiler kernels and link them into your application (Microsoft Visual Studio modifications are described later in this article), it is time to start writing Intel SPMD Program Compiler code and hook it into your application. Hooks To call any Intel SPMD Program Compiler kernels from your application code, you need to include the relevant auto-generated output header file and then call any of the exported functions as you would any normal library, remembering that all declarations are wrapped in the ispc namespace. In the sample, we call ispc::ProcessParticles from within the SimulateCPU function.

Parallel Computing

Vector Math Once the hooks are in, the next step is to get scalar Intel SPMD Program Compiler code working, and then vectorize it. Most of the scalar C code can be dropped straight into an Intel SPMD Program Compiler kernel with only a few simple modifications.

In the sample, all vector math types needed defining because, although Intel SPMD Program Compiler does provide some templated vector types the types are only needed for storage, so new structs were defined. Once done, all XMFLOAT types were converted to the Vec3 and Vec4 types. Keywords We now need to start decorating the code with some Intel SPMD Program Compiler specific keywords to help direct the vectorization and compilation. The first keyword is export, which is used on a function signature like a calling convention, to inform Intel SPMD Program Compiler that this is an entry point into the kernel. This does two things. First, it adds the function signature to the autogenerated header file along with any required structs, but it also puts some restrictions on the function signature, as all arguments need to be scalar; which leads us to the next two keywords to be used, varying and uniform. A uniform variable describes a scalar variable that will not get shared, but its contents will be shared across all SIMD lanes, while a varying variable will get vectorized and have unique values across all SIMD lanes.

All variables are varying by default so whilst the keyword can be added, it has not been used in this sample. In our first pass of creating a scalar version of this kernel, we will decorate all variables with the uniform keyword to ensure it is strictly scalar.

Intel SPMD Program Compiler Standard Library Intel SPMD Program Compiler provides a containing many common functions which can also aid the port, including functions like floatbits and intbits, which are required for some of the floating point casts required in the fast reciprocal sqrt function. Vectorizing the Intel SPMD Program Compiler Kernel When the Intel SPMD Program Compiler kernel is functioning as expected, it is time to vectorize. The main complexity is normally deciding what to parallelize and how to parallelize it. A rule of thumb for porting GPU compute shaders is to follow the original model of GPU vectorization which, in this case, had the core compute kernel invoked by multiple GPU execution units in parallel. So, where we added a new outer loop for the scalar version of the particle update, it is this outer loop that should most naturally be vectorized. The layout of data is also important, as scatter/gather operations can be expensive for vector ISAs (although this is improved with the Intel AVX2 instruction set), so consecutive memory locations are normally preferred for frequent loads/stores. Parallel Loops In the n-body example, this rule of thumb was followed and the outer loop was vectorized, leaving the inner loop scalar.

Therefore, 8 particles would be loaded into the Intel AVX registers and all 8 would then be tested against the entire 10,000 particles. These 10,000 positions would all be treated as scalar variables, shared across all SIMD lanes with no scatter/gather cost. Intel SPMD Program Compiler hides the actual vector width from us (unless we really want to know), which provides a nice abstraction to transparently support the different SIMD widths for Intel SSE4 or Intel® Advanced Vector Extensions 512 (Intel® AVX-512), and so on.

The vectorization was done by replacing the outer for loop with an Intel SPMD Program Compiler foreach loop, which directs Intel SPMD Program Compiler to iterate over the range in N-sized chunks, where N is the current vector width. Hence, whenever the foreach loop iterator ii is used to dereference an array variable, the value of ii will be different for each SIMD lane of the vector, which allows each lane to work on a different particle. Data Layout At this point, it is important to briefly mention data layout. When using vector registers on the CPU it is important that they are loaded and unloaded efficiently; not doing so can cause a big performance slowdown. To achieve this, vector registers want to have the data loaded from a structure of arrays (SoA) data source, so a vector width number of memory-adjacent values can be loaded directly into the working vector register with a single instruction. If this cannot be achieved, then a slower gather operation is required to load a vector width of non-adjacent values into the vector register, and a scatter operation is required to save the data out again.

In this example, like many graphics applications, the particle data is kept in an array of structures (AoS) layout. This could be converted to SoA to avoid the scatter/gather, but due to the nature of the algorithm, the scatter/gather required in the outer loop becomes a small cost compared to processing the 10,000 scalar particles in the inner loop, so the data is left as AoS.

Vector Variables The aim is to vectorize the outer loop and keep the inner loop scalar, hence a vector width of outer loop particles will all be processing the same inner loop particle. To achieve this, we load the position, velocity, and acceleration from the outer loop particles into vector registers by declaring pos, vel, and accel as varying.

This was done by removing the uniform decoration we added to the scalar kernel, so Intel SPMD Program Compiler knows these variables require vectorizing. This needs propagating through the bodyBodyInteraction and Qrsqrt functions to ensure they are all correctly vectorized. This is just a case of following the flow of the variables and checking for compiler errors. The result is that Qrsqrt is fully vectorized and bodyBodyInteraction is mainly vectorized, apart from the inner loop particle position thatPos, which is scalar. This should be all that is required, and the Intel SPMD Program Compiler vectorized kernel should now run, providing good performance gains over the scalar version. Performance The modified n-body application was tested on two different Intel CPUs and the performance data was captured using to record the frame times from three runs of 10 seconds each, which were then averaged. This showed performance scaling in the region of 8–10x from scalar C/C code to an Intel AVX2 targeted Intel SPMD Program Compiler kernel.

Both devices used Nvidia. 1080 GTX GPUs and used all available CPU cores.

Processor Scalar CPU Implementation Intel® AVX2 Implementation Compiled with Intel® SPMD Program Compiler Scaling Intel® Core™ i7-7700K processor 92.37 ms 8.42 ms 10.97x Intel Core I7-6950X Processor Extreme Edition brand 55.84 ms 6.44 ms 8.67x How to Integrate Intel SPMD Program Compiler into Microsoft Visual Studio. Ensure the Intel SPMD Program Compiler compiler is on the path or easily located from within Microsoft Visual Studio. Include your Intel SPMD Program Compiler kernel into your project. It will not be built by default as the file type will not be recognized. Right-click on the file Properties to alter the Item Type to be a Custom Build Tool:. Click OK and then re-open the Property pages, allowing you to modify the custom build tool.

Use the following command-line format: ispc -O2 -o -h -target= -opt=fast-math b. The full command line used in the sample is: $(ProjectDir).

Thirdparty ispc ispc -O2 '%(Filename).ispc' -o '$(IntDir)%(Filename).obj' -h '$(ProjectDir)%(Filename)ispc.h' -target=sse4,avx2 -opt=fast-math c. Add the relevant compiler generated outputs ie. Obj files: $(IntDir)%(Filename).obj;$(IntDir)%(Filename)sse4.obj;$(IntDir)%(Filename)avx2.obj d. Set Link Objects to Yes. Now compile your Intel SPMD Program Compiler kernel.

If successful, this should produce a header file and an object file. Add the header to your project and include it into your application source code. Call the Intel SPMD Program Compiler kernel from the relevant place, remembering that any functions you export from the kernel will be in the Intel SPMD Program Compiler namespace: Summary The purpose of this article was to show how easily developers can migrate highly vectorized GPU compute kernels to vectorized CPU code by using Intel SPMD Program Compiler; thereby allowing spare CPU cycles to be fully utilized and providing the user with a richer gaming experience. The demonstrated extra performance from using Intel SPMD Program Compiler kernels instead of scalar code is available with very little effort for any workloads that naturally vectorize, and using Intel SPMD Program Compiler reduces development and maintenance time while also enabling new instruction sets to be supported with ease.

US0A1 - System and method for efficiently executing single program multiple data (SPMD) programs - Google Patents Connect public, paid and private patent data with System and method for efficiently executing single program multiple data (SPMD) programs Info Publication number US0A1 Authority US Grant status Application Patent type Prior art keywords job jobs task data simd Prior art date 2003-11-14 Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.) Granted Application number US10714179 Other versions Inventor Stefano Cervini Current Assignee (The listed assignees may be inaccurate. Google has not performed a legal analysis and makes no representation or warranty as to the accuracy of the list.) STMicroelectronics lnc Original Assignee STMicroelectronics lnc Priority date (The priority date is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the date listed.) 2003-11-14 Filing date 2003-11-14 Publication date 2005-05-19 Links.

Images. Classifications.

G— PHYSICS. G06— COMPUTING; CALCULATING; COUNTING. G06F— ELECTRICAL DIGITAL DATA PROCESSING. G06F9/00— Arrangements for programme control, e.g. Control unit. G06F9/06— Arrangements for programme control, e.g. Control unit using stored programme, i.e.

Using internal store of processing equipment to receive and retain programme. G06F9/46— Multiprogramming arrangements. G06F9/48— Programme initiating; Programme switching, e.g. By interrupt. G06F9/4806— Task transfer initiation or dispatching.

G06F9/4843— Task transfer initiation or dispatching by program, e.g. Task dispatcher, supervisor, operating system.

G— PHYSICS. G06— COMPUTING; CALCULATING; COUNTING. G06F— ELECTRICAL DIGITAL DATA PROCESSING. G06F9/00— Arrangements for programme control, e.g.

Control unit. G06F9/06— Arrangements for programme control, e.g. Control unit using stored programme, i.e. Using internal store of processing equipment to receive and retain programme. G06F9/30— Arrangements for executing machine-instructions, e.g. Instruction decode. G06F9/38— Concurrent instruction execution, e.g.

Pipeline, look ahead. G06F9/3836— Instruction issuing, e.g.

Dynamic instruction scheduling, out of order instruction execution. G06F9/3851— Instruction issuing, e.g.

Dynamic instruction scheduling, out of order instruction execution from multiple instruction streams, e.g. Multistreaming Abstract.

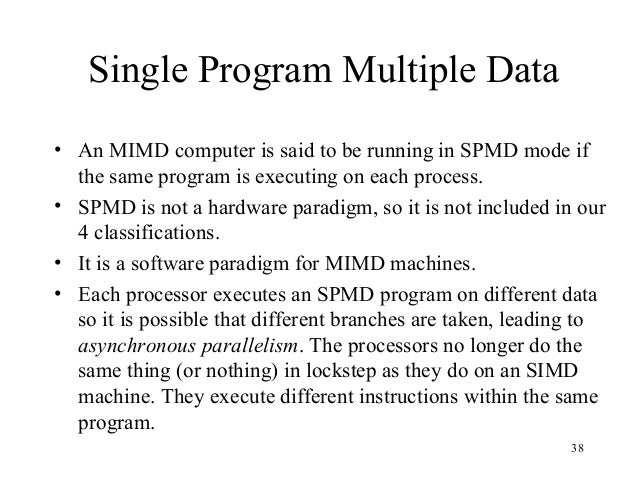

A system and method is disclosed for efficiently executing single program multiple data (SPMD) programs in a microprocessor. A micro single instruction multiple data (SIMD) unit is located within the microprocessor. A job buffer that is coupled to the micro SIMD unit dynamically allocates tasks to the micro SIMD unit. The SPMD programs each comprise a plurality of input data streams having moderate diversification of control flows. The system executes each SPMD program once for each input data stream of the plurality of input data streams. The present invention comprises a new microprocessor architecture for high performance parallel computing.

The present invention is intended to provide high performance at low cost for parallel computing problems characterized by an abundant data level parallelism and a moderate but not negligible diversification of control flows observed during the execution of processes associated with a number of data streams. These types of problems are not efficiently supported by the two main prior art paradigms of parallel computing known as Single Instruction Multiple Data (SIMD) and Multiple Instruction Multiple Data (MIMD). As will be more fully discussed, the present invention introduces a new system and method to more appropriately and efficiently solve this class of problems.

The new system and method is called Single Program Multiple Data (SPMD). The SPMD system and method executes the same program once for each one of the input data streams, possibly occurring in a variety of control flows due to the presence of data dependent control flow constructs in the program. The SPMD system and method contrasts with prior art SIMD paradigms in that the instruction streams are independently generated for each data stream, as opposed to being common to all of the data streams as is the case in the SIMD paradigm. On the other hand, the SPMD system and method differs from the MIMD paradigm in that the independent instruction streams are generated from the same program as opposed to being potentially generated by different programs as is the case in prior art MIMD paradigms. The prior art SIMD category includes parallel problems whose data streams do not produce any control flow diversification, and the prior art MIMD category includes parallel problems whose instruction streams are totally uncorrelated, mostly generated by different programs indeed. It is worth noting that when referring to classes of problems, the SIMD class is a subset of the SPMD class. In turn, the non-SIMD part of the SPMD class is a subset of the MIMD class.

Such non-SIMD SPMD problems that are limited to a “moderate, yet not negligible” control flow diversification represent the class of problems addressed by the SPMD system and method of the present invention. An extension of the SPMD system and method consists in having a number of programs each being executed on a number of input data streams. The number of data streams associated with each program is arbitrary and independent of each other, but a reasonable assumption is that most programs will be executed on a number of data streams significantly larger than a certain threshold. Such a threshold is a characteristic of the problem and refers to the “program granularity” of the problem. Even though the program granularity is a factor to be taken into account when applying the present invention to a specific problem, as long as the program granularity is a reasonably high number (for instance, eight (8) or higher), it does not have any impact over the main concepts of the invention. For this reason, this aspect will not be considered any further. In general the performance of a microprocessor depends upon its ability to keep all of its functional units (FUs) busy most of the time.

This is an ultimate objective of any microprocessor design. An alternative way to state this objective is to state that the objective is to efficiently supply the functional units (FUs) with the operands and control signals they need in order to execute the instructions. The notion of efficiency involves such factors as silicon area, power dissipation and computing speed. The system and method present invention is designed to achieve increased efficiency in these areas.

As previously mentioned, the SPMD system and method does not have a specific hardware counterpart. Known solutions to the execution of SPMD problems employ prior art SIMD machines with enhanced flexibility. Existing architectures of prior art SIMD machines comprise arrays of Processing Elements (PE) to which an instruction stream is broadcast by an Array Control Unit (ACU). At any time, the currently broadcast instruction is executed on each PE using local data (i.e., the data corresponding to the data stream associated with each individual PE). Although the execution of each single instruction is independent of each PE, all of the PEs are required to start the execution synchronously. This dynamic of instruction execution is referred to as the “lockstep execution mode” and it is responsible for the major source of inefficiency of prior art SIMD machines when used to execute SPMD programs. To better explain why this occurs the flow of control of a program execution will first be defined.

Then the program control flow will be related to the source of inefficiency. The execution of an instruction causes a change of the internal state of the machine. The subset of the state that is visible to the programmer is called the architectural state of the machine. The instruction stream is generated by properly fetching instructions from the program compiled code according to the value of the Program Counter (PC). The PC always points to the following instruction in the program order unless the instruction previously executed did not explicitly change it. In a programming language (for example, the programming language C) there are specific constructs that force the PC to take on a different value than the simple sequential increase. In general these constructs contain a logic expression that conditionally (that is, depending on the value of the logic expression) produce a change of the PC.

The program control flow is the sequence of the values taken on by the PC during the program execution. When executing the same program on a number of different data streams it is possible to observe a different control flow for each of the data streams, due to the different values that the logic expressions contained in the branch instructions may take on. The performance attainable by a prior art SIMD machine is greatly impoverished when executing a program on data streams that are significantly different. Intuitively, the explanation of this result is that in the presence of strong control flow divergence, due to the lockstep execution mode, the PEs, alternatively to periods of execution, are forced to stand idle until all the PEs have terminated the execution of the current instruction. These waiting periods are due to the fact that only a portion of the PEs can participate in the execution of the current instruction, depending on whether their respective control flow shares the same instruction being currently broadcast. Prior art Micro-SIMD architectures have been used in instances where the SIMD paradigm has been combined with single chip integration.

Such Micro-SIMD architectures have been demonstrated to offer high efficiency when dealing with a data level parallelism. In a paper by R. Lee entitled “Efficiency of Micro-SIMD Architectures and Index-Mapped Data for Media Processors” published in the Proceedings of Media Processors 1999, IS T-SPIE Symposium on Electric Imaging: Science and Technology, pp. 25-29, 1999, Micro-SIMD architectures are described and compared with the other known parallel architectures such as the MIMD, SIMD, Superscalar and Very Long Instruction Word (VLIW) architectures.

The basic concept involves treating each word of the register file as being completely composed of a number of subwords each containing data valid for a different PE. This concept is illustrated in FIG. 2 the register file is made up of registers containing four subwords.

The register file is shared among all the functional units (FUs) attached to it. When a FU executes an instruction (for example, a multiply instruction) it actually performs four multiplications using as operands pairs of subwords contained in two distinct registers.

The FUs carry out vector operations and can therefore be referred to as “vector FUs” to distinguish them from the “scalar FUs” (the four multipliers in the example) that they are made up of. The number of scalar FUs contained in the vector FUs is referred to as the “size” of the Micro-SIMD unit, and is indicated with the parameter “m” throughout this patent document. The register file is said to be “shared and partitioned” in that each one of the “r” registers is shared along with the FUs axis (each register can be an operand of any FU) but the registers are partitioned into subwords each associated to one and only one of the “m” scalar operators in the FUs. Second, the Micro-SIMD structure is less susceptible to suffer from wire delay problems than other architectures.

The trend of performance of future microprocessors has started to show that the most limiting bottleneck will shift from gate speed to wire delay. In the near future, a single wire will take tens of cycles to cross the entire area of a die, making synchronous single-clocked techniques highly unattractive. In the attempt to avoid long wires, solutions in which the chip area is partitioned in multiple regions each working asynchronously with respect to each other will be favored over fully synchronous solutions. Because of the packed nature of the Micro-SIMD structure, wire lengths are much shorter than what could possibly be obtained with a conventional SIMD architecture.

Nevertheless, it retains the advantages of SIMD machines consisting in a shared control unit. The first solution involves using an “Active Flag Matrix” of bits with as many columns as the number of PEs and as many rows as the number of nested branches supported. The matrix is treated as a Last In First Out (LIFO) stack, growing in the row dimension. Entire rows are pushed in and popped out of the matrix. A new row is pushed into the stack any time a branch instruction is encountered, while it is popped out whenever an “endif” instruction is executed. At any time the row on top of the matrix (the one last pushed in) represents the activation mask of the PE array, in particular the “n-th” bit of the last row represents the activation status of the “n-th” PE. This technique poses a limit to the maximum level of nested branches allowed in the program.

The branching problem has its roots in the lockstep execution mode. Therefore, the branching problem is generated by the very nature of SIMD machines. Another problem that arises from executing SPMD programs on SIMD machines applies only to the specific class of Micro-SIMD architectures. This problem relates to the write-back stage of the execution pipeline. In a register-to-register architecture, instructions require the availability of one source operand (for unary operations) or two source operands (for binary operations) from the register file and write the result of the operation back to a destination address of the register file.

Because of the partitioned structure of the register file, every register contains data for all of the PEs. This is illustrated in FIG. For this reason two reads of the register file provides all the data for the SIMD instruction. When an instruction is to be executed for only a portion of the PEs (i.e., when the activation mask reveals some inactive PEs), then the write-back of the result of the instruction in the destination register has to be selectively applied only to the subwords associated with the active PEs. Otherwise, a full register write (i.e., not selective) would possibly override the data (which is still valid) associated with the subwords of the inactive PEs. 3 illustrates the logic that must be added to the data path of the PEs in order to prevent this problem from occurring.

The disadvantages of this solution are represented by the additional area required by the register to store the previous content of the register, the logic of selective write (muxes), and an extra read port in the register file. Moreover, due to the additional read port, the register-file access time increases, and possibly exceeds the cycle time of the clock.

The drawbacks mentioned above apply to every functional unit of the PEs. For example, with N functional units (FUs), N additional read ports (3N in total) are necessary, resulting in a total area overhead that is N times what is described above. An alternative solution involves renaming the registers within the “else” branch and subsequently merging them into the original ones (i.e., those referred to in the “then” branch). In this technique the destination registers are always written in full, and that is in all of their subwords, but only some of the subwords actually contain valid data. The PEs that are active in the “then” branch provide a subset of the valid words of the destination register, and the PEs that are active in the “else” branch provide the remaining subwords.

Because the destination registers in the “else” part are renamed, there is no overlapping of the data that was previously written during the “then” branch. Outside of the “if” statement (i.e., after the corresponding “endif” instruction is encountered), the renamed registers must be merged into the original registers. This merging operation could be done by having the compiler insert, after the “else” branch, some “merge” instructions, one for each register that has been renamed. The “merge” operation has three operands, the first two being the original and renamed registers respectively, and the third being the value of the active flag register as it was at the beginning of the “else” branch. The result of the “merge” operation is a full word whose subwords are taken from either the first or the second operand depending on the value of the active flag register. In particular, if the “n-th” bit of the active flag register is one (“1”) then the “n-th” subword of the result of the “merge” operation is the “n-th” subword of the second (renamed) register, otherwise it is the “n-th” subword of the first register. The cost associated with this solution is in terms of both the additional registers needed to support the renaming as well as the additional cycles needed to carry out the “merge” instructions.

The higher numbers of registers needed implies both a larger silicon area and possibly a longer access time, which in turn possibly leads to a longer cycle time. It is therefore seen that the two prior art techniques to support SPMD execution on a Micro-SIMD machine suffer from higher area cost and possibly poorer performance. To correctly manage the write-back stage into the register file after the execution of an instruction, two techniques have been described. The disadvantages of each of these two techniques are summarized in TABLE TWO.

TABLE TWO SELECTIVE WRITE-BACK STAGE REGISTER RENAMING Additional Read Port Greater Number of Cycles Larger Area Larger Area Longer Cycle Time Longer Cycle Time. The impact over the cycle time depends on the properties of the other parts of the data path design. The cycle time has to be longer than the shortest electrical path in the design (the critical path). To avoid a cycle time penalization, one prior art technique involves splitting the critical path in two parts and pipelining it. This technique leads to superpipelined architectures.

In this way the critical path is moved to another electrical path in the design that hopefully is much shorter than the previous path. The disadvantages of pipelining techniques include more difficult control and larger areas, so that the cost/benefits of this technique have to be traded off in the context of the entire design. Possibly global optimization considerations would favor an increase of cycle time. Simultaneous Multi-threading (SMT) is a microprocessor design that combines hardware multi-threading with superscalar processing technology to allow multiple threads to issue instructions each cycle. Supporting SMT in a SPMD execution mode means that each slot of the instruction issue can be occupied by instructions belonging to different threads. The SMT paradigm does not impose any restrictions as far as which slots a particular thread is allowed to occupy.

In general any thread can occupy any slot, even though methods for preventing thread starvation and guaranteeing thread balance are required. Therefore, although these methods will try to avoid that a single thread will systematically take hold of all the instruction slots, it is possible that this situation will occasionally occur. Moreover, the same thread can be assigned in different cycles to different slots. In order to overcome the register write-back problem that was previously described, the data path modifications of FIG. 3 need to be further extended as illustrated in FIG. 4 and in FIG. 4 illustrates data path modifications to a prior art Micro-SIMD architecture that are needed to support execution of single program multiple data (SPMD) programs in a simultaneous multi-threading (SMT) environment.

5 illustrates a more detailed version of the modified Micro-SIMD architecture shown in FIG. The present invention comprises a job buffer and a micro single instruction multiple data (SIMD) unit within a microprocessor. An output of the job buffer is coupled to an input of the micro SIMD unit. The job buffer dynamically allocates tasks to the micro SIMD unit. The micro SIMD unit sends job status information back to the job buffer to enable the job buffer to efficiently allocate the tasks. The SPMD programs executed by the system and method of the present invention each comprise a plurality of input data streams having moderate diversification of control flows.

The system executes each SPMD program once for each input data stream of said plurality of input data streams. The foregoing has outlined rather broadly the features and technical advantages of the present invention so that those skilled in the art may better understand the detailed description of the invention that follows. Additional features and advantages of the invention will be described hereinafter that form the subject of the claims of the invention. Those skilled in the art will appreciate that they may readily use the conception and the specific embodiment disclosed as a basis for modifying or designing other structures for carrying out the same purposes of the present invention. Those skilled in the art will also realize that such equivalent constructions do not depart from the spirit and scope of the invention in its broadest form. Basic Definitions.

(1) “Job”: A job is a combination of a program and an input data-set. The same program may be instantiated with a number of different input data-sets, thus generating different jobs. Throughout this patent document it will be assumed that the execution of jobs generates asynchronous processes, wherein no inter-job synchronization is required. Jobs are indicated with the notation j l (P) where the superscript letter “P” is the associated program of the job and the subscript letter “l” refers to a given enumeration of the input data-sets of the program P. (3) “Job Equivalence”: Given two jobs associated with the same program, the two jobs may reveal identical portions of their instruction streams (i.e., identical subsequences of their sequences of PC values). 7 the jobs j 1 and j 2 have two of these identical substreams, graphically represented by means of the same number within each substream.

When executing the two jobs, the beginning of each substream is annotated with the time stamps t 1 (1), t 2 (1), t 3 (1) and t 1 (2), t 2 (2), t 3 (2) respectively for the jobs j 1 and j 2. Two jobs are said to be equivalent if they are beginning the execution of an identical substream at the same time.

For example, FIG. 7 illustrates jobs that have two opportunities to be equivalent: when executing the first substream if t 1 (1)=t 1 (2) and when executing the third substream if t 3 (1)=t 3 (2). Note that if the first job completes the execution of the second substream before j 2, it still has the opportunity to regain synchronization with j 2 in inserting a required waiting period (as illustrated in FIG. By doing so, the jobs j 1 and j 2 are again equivalent at time t 3=t 3 (1)=t 3 (2).

(7) “Task Instruction Stream”: Because the jobs bundled in a task are, by definition, equivalent then the instruction stream of the jobs can be collectively referred to a single task instruction stream. Task instruction streams are graphically illustrated in FIG. 6 by means of lines within the tasks. The example shown in FIG. 6 illustrates six (6) tasks.

Each task comprises four (4) jobs. A task terminates as soon as the task instruction stream encounters a certain pre-defined instruction that is referred to as a “code-stop.”.

“Equivalent Tasks”: Tasks are said to be equivalent if they have the same task instruction stream. Equivalent tasks respectively bundle disjoint subsets of the same job cluster, and they can be allocated to the Micro-SIMD unit either sequentially or (asynchronously) in parallel. Because instructions are independently fetched from task instruction streams even when they are equivalent, the number of task instruction streams simultaneously alive always corresponds to the number of tasks being concurrently executed.

States of Job Execution. 11 illustrates a state diagram showing how the execution of a job may be described in terms of states and transitions between states. Each state represents a different physical memory where the context of the job resides, so that it mirrors a context-switch subsystem (which will be described later). The transitions between states represent the operations associated with the moving of part of the entire job context in order to bring it closer to the functional units. Subsequently a job is admitted into the system. That is, its context is brought into the on-chip memory where it will always reside thereafter.

At this point the job is in an “idle” state 1120 and can be scheduled for execution. It is assumed that the number of jobs in the “idle” state 1120 is greater than the number of jobs that can be simultaneously executed. For this reason, at any time only a portion of the “idle” jobs are being executed and the others remain in a “wait” state (which will be described later), waiting for a slice of execution. 12A illustrates a refinement of the “idle” state 1120 of FIG. The first time the jobs are brought into the system they are said to be in an “admitted” state 1220. After having been executed for a slice of execution (in “active” state 1130), the jobs are temporarily kept on hold in a “hold” state 1230. In the “hold” state 1230 the jobs release the hardware resources that they were using and compete with the others in order to regain their possession.

Because all of the “idle” jobs compete for the same execution slots, a job can either succeed or fail in obtaining a slot. Therefore, a job in a “hold” state 1230 can either be rescheduled for another slice and become “active” again (in “active” state 1130) or enter into a “wait” state 1240. New opportunities for the jobs in the “admitted” states 1220 and in the “wait” states 1240 to become active are contingent on the termination of a task. At that point the jobs that make up the task just terminated enter a “hold” state 1230, release the executions slots, and those that were in an “admitted” state 1220 and in a “wait” state 1240 can compete for the slots just released. The concept of code-stops and how code-stops intervene in the formation of tasks will now be discussed. A code-stop is any one of a number of predefined (program dependent) Program Counter (PC) values that cause a task to terminate. Equivalently, code-stops cause jobs to change their state from “active” to “hold.” A code-stop is represented by a mark in the program code, or, equivalently, by a value of the Program Counter (PC).

In the pseudo-code examples set forth below, double-line arrows mark the instructions where a code-stop is placed. When executing a task the Program Counter (PC) is shared by all the PEs in the Micro-SIMD array 920. As soon as the Program Counter (PC) matches the value of a code-stop, the task is forced to terminate after the completion of the current instruction and the result of the code-stop is used to independently update the PC for each PE. The possible values that the locally updated PCs might have as a result of the independent PC updating are marked by single-line arrows in the following pseudo-code examples.

This means that a code-stop has been encountered, the lockstep mode of execution is temporarily abandoned, in that each PE is allowed to update its own copy of the PC as if it were executing in scalar mode. As has been previously mentioned, code-stops force the termination of the task at points where the control path of the job might fork. This prevents any PE-array underutilization from occurring. On the other hand, a critical aspect of this mechanism is the number of instructions that a task embraces (i.e., the number of instructions between two consecutive code-stops). In fact, due to the overhead of control operations involved in the allocation of new tasks, the longer the task duration, the better the overhead can be hidden.

The pseudo-code example in TABLE SIX illustrates this principle. Whenever the two branches of an “if” conditional statement have the sole purpose of calculating the values of subexpressions of a bigger expression (TABLE SIX (a)), both the sets of subexpressions can be calculated and then the correct set selected according to the value of the Boolean expression in the conditional. In TABLE SIX (b) the code of TABLE SIX (a) is transformed to explicitly calculate the subexpressions in both of the branches.

In TABLE SIX (c) the “select” instructions are inserted. The semantic of the “b=select (p,b1,b2)” instruction is as follows: If “p” is one (1) then assign “b1” to “b”, else assign “b2” to “b”. If the two branches are asymmetric in that some variables are set in only one of the branches and are alive outside the scope of the conditional statement, then this expression should be guarded and executed by using predictive execution techniques.

The code-stop reduction trades off possible additional computation for less frequent task allocations, so it must be selectively applied to the control flow statements of the job programs depending on the characteristics on their bodies. TABLE SIX a b c 1. Because the Program Counter (PC) gets sequentially updated between two consecutive code-stops, the entire control path of a job is fully represented by the sequence of the statuses taken on after the execution of all of the encountered code stops. The notation c s indicates that the status “s” is taken on immediately after executing the code stop “c”.

The execution of a job can then be represented by: j l (P)=( s l,1,c l,1 s l,2,c l,2 s l,3,.,c l,L l −1 s l,L l) where L l is the number of statuses visited by the job j l (P) and ∀n, c l,nεC(P). The group of instructions between two code-stops (including the last instruction) is called a “basic block.” While the execution of a job progresses, the job is indicated by the sequence of the statuses that are yet to be visited. For example, a job that has already executed the first “n minus one” (n−1) basic blocks is indicates with the expression: j l (n)=( s l,n,c l,n s l,n+1,c l,n+1 s l,n+2,.,c l,L l −1 s l,L l) The status of the job (i.e., job status) rules the job clustering process and, consequently, as will be more fully described, the instantiation of the tasks.

The central concept of the present invention is a mechanism to “dynamically” bundle the jobs to be executed on the basis of their control flow equivalence. The overall result is the allocation to the Micro-SIMD unit 920 of “ideal” tasks. That is, pieces of computations that, sharing the same control flow, are immune to the problem of SIMD array underutilization caused by control flow divergence among the executing jobs. In order to guarantee that the jobs being jointly executed on the Micro-SIMD unit 920 always share the same control flow, the operation of task allocation must be performed before any control flow divergence can take place, or, in other words, when a code-stop is encountered. From the job buffer 910, jobs are selected for the formation of tasks to be dispatched to the Micro-SIMD unit 920. 13 illustrates this concept.

The tasks being executed release the Micro-SIMD unit 920 (and the participating jobs enter the “hold” state 1230) at the next code-stop, thereby allowing an update of the clustering structure of the job buffer 910. The preemptive nature of task execution is the key to continuously assure that at any time the best-fit tasks take hold of the Micro-SIMD unit 920. 14 illustrates a graph in which the nodes represent job statuses and the arcs represent possible status transitions. Each job exhibits its own path of status transitions, so that in a MIMD execution scenario (where the control flows of the jobs are independently handled) it is not possible to predict “a priori” in which node each job is located at a given time.

Different jobs can actually scatter over all of the nodes. Job clustering assures that only jobs in the same status (node) are allocated to the Micro-SIMD unit 920. In addition to overcoming the inefficiencies generated by a poor array utilization, a dynamic job clustering and allocation also allows a Micro-SIMD unit 920 to easily support SMT.

In fact, as previously explained, supporting SMT in a system where a thread is the execution of an SPMD program is made difficult by the fact that a given PE can be, independently with respect to the other PEs and simultaneously, “active” for some threads and “inactive” for others. With the technique of job clustering this problem disappears as each thread will be “active” by construction on each PE. The cost in terms of additional silicon area that the implementation of these mechanisms require is tolerable. As far as the benefits are concerned, the benefits depend on the particular characteristics of the applications. As anticipated in the introduction, for Multi-SPMD problems this approach will produce a level of performance far higher than what would otherwise be attainable with other known approaches. For problems not belonging to the Multi-SPMD category other architectures are preferable to the present one.

With respect to the second requirement, the probability of generating ideal tasks changes, as a consequence of admitting new jobs, depending upon how close are the control flows of the new jobs both respect to each other (leading to the immediate generation of ideal tasks) and subsequently with respect to the jobs whose execution is already on-going. In order to meet the second requirement, it is assumed that the client application sends to the job buffer jobs prioritizing those that are associated with the same program and, where possible, adopting application-dependent inter-jobs control path metrics.

The number of jobs that the job buffer 910 contains is critical to the overall performance of the invention. A larger buffer provides better opportunities to find ideal tasks for the Micro-SIMD unit 920.

On the other hand, a larger buffer costs more in terms of silicon area and the time to perform the job clustering operation. The time grows as the order of log(J) (i.e., O(log(J)), where J is the number of jobs maintained in the job buffer 910. The optimal job buffer size depends on the characteristics of the typical computing workload conditions that are being targeted. In one advantageous embodiment of the invention the value of J is four (4) times the size of the Micro-SIMD unit 920. That is, J=4 m where “m” is the size of the Micro-SIMD unit 920.

Job Buffer Maintenance. It is seen that the transformation “jobclustering” performs a re-partition of the set of “idle” jobs I whenever there is a change of the collective status information of the “idle” jobs.

It is therefore very important to maintain consistency between the content of the job buffer 910 and the actual collective status of the processing of the jobs. To this purpose the events are identified that need to be handled in order to keep job buffer consistency. Based on the possible state transitions of the idle jobs, there are three ways to alter the set I. A specific event is associated with each state transition. Each of the specific events causes a group of jobs to flow in or flow out of the set I. Virtual Processing Element Emulation. In order to maximize the sizes of the job clusters the jobs that have a possibility of reaching the status of the other tasks should be executed first.

An “alive” status s is said to be “complete” if it is not reachable by any other job in the job buffer 910. In other words, a status s is complete if the upstream code stop of s (i.e., c s) cannot be reached by any possible control path of any job in the job buffer 910. This means that the job cluster s F cannot grow any further, so that jobs from this cluster can be immediately executed with no impact on performance. Each job loops over the idle-scheduled-ready-executing loop for a number of times before terminating.

A task exists only between the states “scheduled,” “ready,” and “executing.” The states “idle” and “terminated” relate to job states only. The task states (“scheduled”, “ready”, and “executing”) correspond to the single job state “active”, which means that while a job is “active” the task it belongs to can be in either the “scheduled,” “ready,” or “executing” state. A task is born when it enters the “scheduled” state and terminates either when the participating jobs terminate or when they enter the “idle” state. To model the conditions that enable the task state transitions, generic “slots” associated with the states are used.

A number of slots are initially available to each state. When entering into a state, a task occupies one of such slots, while when exiting the state it frees up one slot. A state transition is then allowed whenever an empty slot in the destination state is available.

A dashed line in FIG. 16 illustrates the release of slots that the progress of a task has made available to the other tasks. For a task to become “scheduled” and “ready”, two operations need to take place first.

The first operation is the operation of “task generation” (which has been previously explained). Pdms sample project. The second operation is the operation of “data loading” (which will be subsequently explained). In other words, a task needs to be selected by the task generation procedure for the task to perform the “idle-scheduled” transition, and the task needs to load its input dataset for the task to perform a “scheduled-ready” transition. Data Loading. When the input dataset of a task is readily available (i.e., stored in the register file) the task itself can start the execution without incurring the time consuming operations of memory reads. Even though the execution can actually start before having the entire input dataset transferred into the register file, because of the particularly large amount of data to read that a Micro-SIMD architecture has to cope with (m times the size of a dataset for a single job for an m-ary task) a mechanism to hide the data loading time is necessary.

For this purpose the execution of a task is decoupled from the data loading of the task. A number of “execution slots” and an equal number of “ready-task slots” represent respectively the hardware resources that are collectively associated with the execution phase and the data loading phase of the life of a task. A task becomes ready for execution when its input dataset is transferred to the register file attached to the Micro-SIMD unit 920 that the task is going to be executed on. Data loading hiding is accomplished by initiating data loading concurrently to the execution of other tasks.

In this way, ideally, when a task terminates its execution, the input data for the next task is already located in the register file thereby allowing an uninterrupted instruction flow to the Micro-SIMD unit 920. In other words, the transition “scheduled-ready” of a task needs to complete before the transition “executing-idle” of the previous task.

In the case of multiple running tasks (e.g., in a SMT environment) the condition generalizes in requiring that at each transition “executing-idle” there is at least one “ready-task slot” available. Because the jobs are bundled at run time the input dataset must be initially read on a “per job” basis. Only after all of the input datasets of the participating jobs have been written into the register file are the jobs physically bundled. Thereafter, the datasets of the jobs can only be jointly accessed from packed registers as opposed to being individually accessible.

A restricted region of the register file is reserved for non-packed registers, in which the load of the input datasets can take place. This region is called an “Input buffer” (or, equivalently, an “I-buffer”).

I-buffers represent an implementation of the ready-task slots. To speed up the data loading phase an additional write port may be added to the I-buffers.

Executing a data loading phase for a task before executing a task execution phase for said task.